Introduction

In this blog post I will go over how to build a simple linear SVM model for the Kaggle Titanic dataset using Python. The aim here is just to document the process, to better understand what is required to get a simple model up and running, rather than to obtain the best possible model.

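I have chosen to use the linear support vector machine classifier (LinearSVC) based on the “Choosing the right estimator” guide in the scikit-learn documentation. This is primarily because the Kaggle dataset is quite small: for classification problems with fewer than 100K samples, the guide's flowchart suggests trying Linear SVC first.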

Module Imports

import numpy as np

import pandas as pd

from sklearn.svm import LinearSVC

from sklearn.model_selection import train_test_split

from sklearn.impute import SimpleImputer

from sklearn.preprocessing import StandardScaler

- LinearSVC is the support vector machine classifier from scikit-learn.

- train_test_split is a helper for easily splitting the data used in model building into training and testing sets.

- SimpleImputer is a tool used to fill in missing values with an appropriate value. In this code the missing data is filled in with the mean of each column. Treating missing data this way means we don't have to throw away complete rows where only some of the column values are missing.

- StandardScaler rescales each feature to zero mean and unit variance, so that all features are on a comparable scale. The scaled values are not confined to -1 to 1; an outlier can sit several standard deviations from zero. Scaling is needed for some algorithms to work properly, as they do not scale the data themselves. Poorly scaled problems typically result in poor numerical performance in the optimisation algorithms used to build machine learning models.
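To make the scaling point concrete, here is a minimal sketch on a single made-up column (the values are my own, not from the Titanic data). StandardScaler subtracts the column mean and divides by the standard deviation, so the result has mean 0 and standard deviation 1, but individual values can still land outside -1 to 1:

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# One made-up feature column containing an outlier
x = np.array([[0.0], [0.0], [0.0], [10.0]])

# Subtract the column mean (2.5) and divide by its standard deviation
scaled = StandardScaler().fit_transform(x)

print(scaled.ravel())                # the outlier becomes sqrt(3), about 1.732
print(scaled.mean(), scaled.std())   # approximately 0 and 1
```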

Data Preprocessing

The first thing I needed to do was to remove any unusual data points. In this case the only one I chose to remove was the row corresponding to cabin T, as there was only one record of it and I am not sure it would be present in the testing data.

The next step was to choose which columns to use to build the model. For this example I am choosing passenger sex, ticket class and cabin type, based on my previous simple analysis.
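A minimal sketch of these two steps in pandas is below. It assumes the Cabin column has already been reduced to its deck letter (so cabin T appears as the value "T") and that Survived is the target; it uses a tiny made-up DataFrame in place of the real training data, and titanic_training_data is a hypothetical name.

```python
import pandas as pd

# Hypothetical stand-in for the Titanic training data; assumes Cabin
# already holds just the deck letter, with None where it is unknown
titanic_training_data = pd.DataFrame({
    "Sex": ["male", "female", "female", "male", "male"],
    "Pclass": [3, 1, 1, 1, 2],
    "Cabin": [None, "C", "B", "T", "D"],
    "Survived": [0, 1, 1, 0, 1],
})

# Remove the single cabin T row (rows with a missing cabin are kept,
# because a missing value compares as "not equal" to "T")
titanic_training_data = titanic_training_data[titanic_training_data["Cabin"] != "T"]

# Keep only the chosen feature columns, and the target separately
X_data = titanic_training_data[["Sex", "Pclass", "Cabin"]]
Y_data = titanic_training_data["Survived"]
```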

Encoding

As these are all categorical variables they need to be encoded. This is achieved using the pandas get_dummies() function.

categorical_columns = ['Cabin', 'Sex', 'Pclass']

X_data = pd.get_dummies(X_data, drop_first=False, columns=categorical_columns)

Encoding categorical variables in this way means the model does not treat the category labels as ordered numbers with a meaningful scale (for example, ticket class 3 is not "three times" ticket class 1). The next step is to remove one dummy column from each categorical variable. This is because you can always infer the last category if you know the other N-1. For example, if a ball could be one of two colours, red or green, then you would only need to know whether the ball was red (True) or not red (False) to know whether it was green.
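The red/green ball example can be checked directly with a made-up column: after one-hot encoding, the dropped Colour_green column can be rebuilt from Colour_red alone. Passing dtype=int to get_dummies is my addition; it just makes the dummies 0/1 integers rather than booleans so the arithmetic is straightforward.

```python
import pandas as pd

# Made-up data for the red/green ball example
balls = pd.DataFrame({"Colour": ["red", "green", "green", "red"]})

# One dummy column per colour: Colour_green and Colour_red
encoded = pd.get_dummies(balls, columns=["Colour"], dtype=int)

# Drop one of the N = 2 dummy columns, leaving N - 1 = 1
reduced = encoded.drop(["Colour_green"], axis=1)

# "Not red" is exactly "green", so the dropped column is recoverable
recovered_green = 1 - reduced["Colour_red"]
print((recovered_green == encoded["Colour_green"]).all())  # True
```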

columns_to_drop = ['Sex_male', 'Cabin_A', 'Pclass_1']

X_data = X_data.drop(columns_to_drop, axis=1)

X_data_kaggle = X_data_kaggle.drop(columns_to_drop, axis=1)

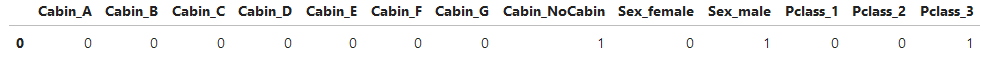

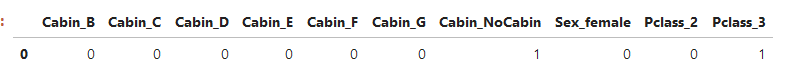

X_data.head(1)

I have chosen the columns to drop manually, rather than using drop_first=True, so that exactly the same columns are dropped from the Kaggle test data.
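To show why a fixed drop list helps, here is a sketch with made-up train and test frames where the test set happens to contain no cabin A passengers. With drop_first=True each frame drops whichever category sorts first within that frame, so the columns stop lining up. The errors="ignore" and reindex(...) calls are an extra safeguard I've added for this illustration, not part of the code above: they let the explicit drop still work when a category is missing from the test data.

```python
import pandas as pd

categorical_columns = ["Cabin", "Sex"]
columns_to_drop = ["Cabin_A", "Sex_male"]

# Made-up data: the test set has no passengers in cabin A
train = pd.DataFrame({"Cabin": ["A", "B", "C"], "Sex": ["male", "female", "male"]})
test = pd.DataFrame({"Cabin": ["B", "C", "C"], "Sex": ["female", "male", "female"]})

# drop_first=True drops the first category *of each frame*, so the
# training and test columns end up different
auto_train = pd.get_dummies(train, columns=categorical_columns, drop_first=True, dtype=int)
auto_test = pd.get_dummies(test, columns=categorical_columns, drop_first=True, dtype=int)

# Dropping the same named columns from both frames is repeatable;
# errors="ignore" skips a column that is absent, and reindex adds any
# missing dummy column back as zeros, in the training column order
manual_train = pd.get_dummies(train, columns=categorical_columns, dtype=int).drop(columns_to_drop, axis=1)
manual_test = (
    pd.get_dummies(test, columns=categorical_columns, dtype=int)
    .drop(columns_to_drop, axis=1, errors="ignore")
    .reindex(columns=manual_train.columns, fill_value=0)
)
```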

Dealing with missing values

Missing values are handled using SimpleImputer, as mentioned before. A range of strategies can be specified for how to fill in the missing values; for this example I am just choosing to use the mean.

imputer = SimpleImputer(missing_values=np.nan, strategy='mean')

imputer.fit(X_data)

X_data = imputer.transform(X_data)

X_data_kaggle = imputer.transform(X_data_kaggle)

The imputer is used by first creating a SimpleImputer object. The fit() method computes the fill values (here, the column means) from the training dataset (X_data). The transform() method then fills in the missing values with those fitted means. The transform must also be applied to the testing data (X_data_kaggle), so that its gaps are filled with the means from the training data rather than with its own.
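A minimal sketch with small made-up arrays shows the fit/transform split: the means are learned from the training array only (SimpleImputer stores them in its statistics_ attribute) and are then reused to fill the gaps in the test array.

```python
import numpy as np
from sklearn.impute import SimpleImputer

# Made-up training and test arrays with missing values
X_train = np.array([[1.0, 10.0], [3.0, np.nan], [np.nan, 30.0]])
X_test = np.array([[np.nan, np.nan], [5.0, 50.0]])

imputer = SimpleImputer(missing_values=np.nan, strategy="mean")
imputer.fit(X_train)                 # learns column means 2.0 and 20.0
print(imputer.statistics_)

X_train_filled = imputer.transform(X_train)
X_test_filled = imputer.transform(X_test)   # filled with the *training* means
print(X_test_filled)
```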

Scaling the data

scaler = StandardScaler()

processed_X_data = scaler.fit_transform(X_data)

processed_X_data_kaggle = scaler.transform(X_data_kaggle)

In a similar fashion to the imputer, a StandardScaler object is used to scale the X data. The fit_transform() method performs the fit and the transform on the training set in one call, as this is such a common combination. The testing data must be scaled with the same fitted scaler object, so only transform() is called on it.
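The same pattern for the scaler, again on made-up arrays: the mean and standard deviation come from the training data alone, and the test values are standardised with those training statistics (stored in the scaler's mean_ and scale_ attributes).

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Made-up single-feature training and test data
X_train = np.array([[1.0], [2.0], [3.0]])
X_test = np.array([[2.0], [5.0]])

scaler = StandardScaler()
X_train_scaled = scaler.fit_transform(X_train)  # fit and transform in one call
X_test_scaled = scaler.transform(X_test)        # reuse the training mean and std

print(scaler.mean_, scaler.scale_)  # training mean 2.0, std sqrt(2/3)
print(X_test_scaled.ravel())
```

Fitting a fresh scaler on the test data instead would silently put the two sets on different scales, which is why only transform() is used there.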

Training the linear SVM model

classifier = LinearSVC(max_iter=100000)

classifier.fit(processed_X_data, Y_data)

y_pred_kaggle = classifier.predict(processed_X_data_kaggle)

First a LinearSVC classifier object needs to be created. Then the processed X data and the Y data are used to fit the model by calling fit() on the classifier. To predict the Kaggle test set, predict() is called with the scaled test data (processed_X_data_kaggle) as its argument, since the test data must go through the same imputation and scaling as the training data. Making a model really is that simple! The next step is to get the predictions into CSV format for uploading to Kaggle. The easiest way I found to do this was to load them into a pandas DataFrame and use the to_csv() method to write the file:

kaggle_final_df = pd.DataFrame({'PassengerId': titanic_testing_data.PassengerId, 'Survived': y_pred_kaggle})

kaggle_final_df.to_csv('linear_SVM_prediction_sex_cabin_class.csv', index=False)
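Putting the training, prediction and CSV steps together, here is a self-contained sketch on synthetic data. make_classification stands in for the encoded Titanic features, train_test_split carves off a stand-in for the Kaggle test set, and the passenger IDs are made up. Because the synthetic hold-out set has labels (the real Kaggle test labels are hidden), its accuracy can be printed too. Calling to_csv() without a path returns the CSV text as a string, which makes the output easy to inspect.

```python
import numpy as np
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.svm import LinearSVC

# Synthetic stand-in for the encoded, imputed features
X, y = make_classification(n_samples=120, n_features=4, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=20, random_state=0)
passenger_ids = np.arange(1, len(X_test) + 1)  # hypothetical IDs

scaler = StandardScaler()
X_train_scaled = scaler.fit_transform(X_train)
X_test_scaled = scaler.transform(X_test)  # same scaling as the training data

classifier = LinearSVC(max_iter=100000)
classifier.fit(X_train_scaled, y_train)
y_pred = classifier.predict(X_test_scaled)  # predict on the *scaled* test data
print(f"Hold-out accuracy: {classifier.score(X_test_scaled, y_test):.3f}")

submission = pd.DataFrame({"PassengerId": passenger_ids, "Survived": y_pred})
csv_text = submission.to_csv(index=False)  # no path, so a string is returned
print(csv_text.splitlines()[0])  # PassengerId,Survived
```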

Using this model, a Kaggle score of 0.75598 was achieved, which is not too bad for a first attempt. To improve this score I could look at the following:

- Using scikit-learn Pipelines to chain the preprocessing steps with the model, making it easier to compare several models, e.g. random forests and neural networks

- Extracting more information from the feature columns, as well as better treatment of missing values

- Conducting more sophisticated data analysis, such as principal component analysis, clustering or other tools
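As a rough idea of the Pipeline approach from the first bullet, the sketch below chains the same imputer, scaler and classifier with make_pipeline and compares it with a random forest using 5-fold cross-validation. The data is synthetic with about 5% of values blanked out at random, so the scores say nothing about the Titanic data; the models and settings are placeholders of my own choosing.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.impute import SimpleImputer
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import LinearSVC

# Synthetic data with roughly 5% of the values knocked out
X, y = make_classification(n_samples=200, n_features=5, random_state=0)
rng = np.random.default_rng(0)
X[rng.random(X.shape) < 0.05] = np.nan

candidates = {
    "linear_svc": LinearSVC(max_iter=100000),
    "random_forest": RandomForestClassifier(random_state=0),
}

scores = {}
for name, model in candidates.items():
    # Imputing and scaling inside the pipeline means each CV fold is
    # fitted on its own training split only
    pipeline = make_pipeline(SimpleImputer(strategy="mean"), StandardScaler(), model)
    scores[name] = cross_val_score(pipeline, X, y, cv=5).mean()
    print(f"{name}: mean CV accuracy {scores[name]:.3f}")
```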

Concluding remarks

Overall I found the Kaggle exercise quite enjoyable. I definitely refreshed my skills in using pandas and a lot of the scikit-learn toolkit.

On a personal note I did find that I was losing interest in this dataset after playing around with it for a reasonable amount of time. I am not sure if this is because the dataset itself is not real or I just lost some interest over time as I thought of other projects to work on. For anyone considering the challenge I would recommend they give it a go.